`t = tqdm(total=total_size, unit="iB", unit_scale=True)`
`for data in r.`

#CRX FILE EXTRACTOR CODE#

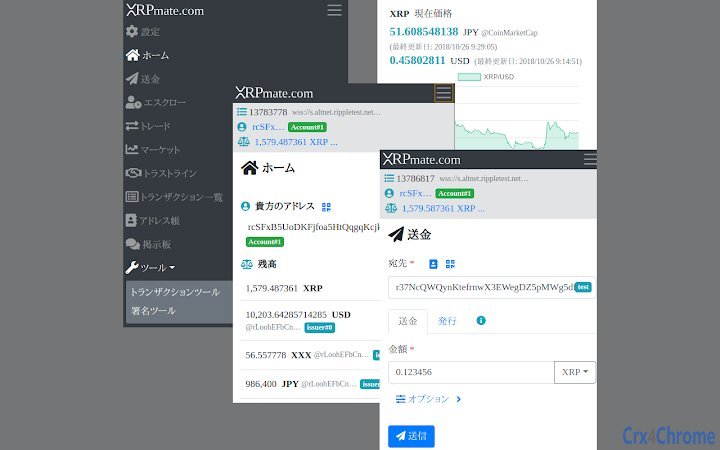

`total_size = int(r.`

Previously we intended to build a Java desktop client with a JavaFX UI. Now we are considering building a web application instead. My previous experience was mostly with Java. Before we begin front-end development, we also want to ensure that the web app will be able to handle all the features we had planned for the desktop client. One requirement is to have a manifest file with the correct fields filled in, linked from the HTML head. The thing I'm having some trouble with is determining which option is best suited for this project.

Web Scraper is a web data extraction extension for Chrome. CRX Extractor acts as an online extractor, source code viewer, file converter, and downloader for browser extensions (.crx/.xpi/.nex), and helps you extract and view their contents. Chrome extensions are stored in your filesystem, under the Extensions folder, inside Chrome's user data directory.
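As a rough illustration of where that Extensions folder usually lives, here is a minimal Python sketch. The per-platform paths are the conventional defaults for a standard Google Chrome install and the `Default` profile; they are assumptions, and a custom install, another channel (Beta, Chromium), or a different profile will use other locations. The function names are hypothetical.

```python
import os
import sys
from pathlib import Path


def chrome_extensions_dir(profile="Default"):
    """Return the conventional Extensions folder for a Chrome profile.

    Assumption: a standard Google Chrome install with default locations.
    """
    home = Path.home()
    if sys.platform.startswith("win"):
        base = Path(os.environ.get("LOCALAPPDATA", str(home / "AppData" / "Local")))
        user_data = base / "Google" / "Chrome" / "User Data"
    elif sys.platform == "darwin":
        user_data = home / "Library" / "Application Support" / "Google" / "Chrome"
    else:
        user_data = home / ".config" / "google-chrome"
    return user_data / profile / "Extensions"


def list_extension_ids(extensions_dir):
    """List subfolder names (one per installed extension ID), sorted."""
    if not extensions_dir.is_dir():
        return []
    return sorted(p.name for p in extensions_dir.iterdir() if p.is_dir())


if __name__ == "__main__":
    folder = chrome_extensions_dir()
    print(folder)
    for ext_id in list_extension_ids(folder):
        print(" ", ext_id)
```

Each subfolder is named after an extension ID and typically contains one folder per installed version.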

Our project has a Java back-end that accesses a Neo4j database.

When you want to download an extension from the Chrome store, CRX Extractor connects to the store on your behalf, extracts the CRX files, and transfers them to you. CRX Extractor is therefore a way to bypass filtering: it can let you avoid a block imposed by a country or an enterprise, no matter which country you are in. If no program opens the CRX file, then you probably don't have an application (such as Google Chrome) installed that can view and/or edit CRX files.

Chrome could extract a path, but neither Selenium nor BeautifulSoup can work with those paths. After many failed attempts to extract the elements using different classes and tags, we believe something is entirely wrong with either our approach or the website. We hypothesize that the desired elements are, for whatever reason, impossible for Selenium and BeautifulSoup to parse. Could the iframe tags in the DOM be a source of error (see this SO question)? What makes the parsing fail here, and is there a way to get around this problem? A website-related cause would also explain why SelectorGadget was unable to get a path in the first place. For German speakers, please note that there is a spelling error in the target elements. Please do not let yourself get confused by it (as we did). Our conclusion would be to use regular expressions to extract the href attributes that we need. This would only be a last-resort solution.
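The regular-expression fallback for href attributes could look roughly like the sketch below. It assumes the page's HTML is already available as a string (for example, the page source returned by the browser); the pattern and the function name `extract_hrefs` are illustrative and not taken from the original question. It only handles quoted `href` values on `<a>` tags, and it will not see markup that lives inside a separately loaded iframe.

```python
import re

# Match <a ...> tags and capture the quoted value of their href attribute.
# Requiring whitespace before "href" avoids matching attributes such as data-href.
HREF_RE = re.compile(
    r"""<a\b[^>]*?\shref\s*=\s*(["'])(.*?)\1""",
    re.IGNORECASE | re.DOTALL,
)


def extract_hrefs(html):
    """Return the href values of all <a> tags found in an HTML string."""
    return [match.group(2) for match in HREF_RE.finditer(html)]


if __name__ == "__main__":
    sample = '<a class="job" href="/jobs/1">One</a> <a href=\'/jobs/2\'>Two</a>'
    print(extract_hrefs(sample))
```

A regex like this is brittle against unusual markup, which is why it is best kept as a last resort after a proper HTML parser has been ruled out.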

#CRX FILE EXTRACTOR HOW TO#

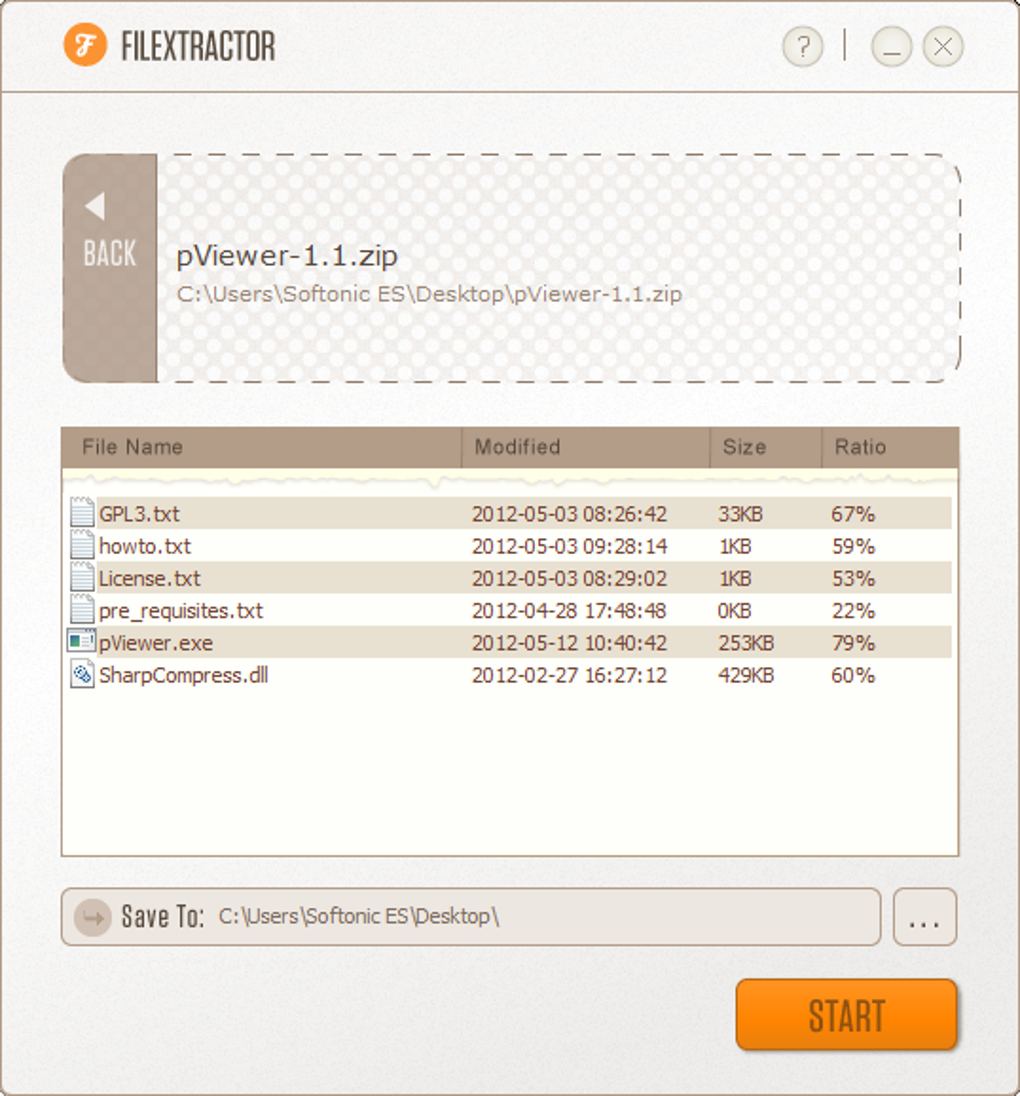

We proceeded to use a CSS path obtained with Google Chrome (Ctrl+Shift+C).

How to open a CRX file: As you probably know, the easiest way to open any file is to double-click it and let your PC decide which default application should open it. To extract the contents of a downloaded file, note that you might need to change its extension to .zip, depending on the method you use to extract the files.

#CRX FILE EXTRACTOR ZIP FILE#

We usually use CSS paths and pass those to Selenium's find_elements_by_css_selector method. Unfortunately, we've noticed that the browser plugin SelectorGadget had trouble providing us with a CSS path.

An .xpi extension package "is simply a ZIP file containing the extension files", so you can open it just like any other ZIP file.

How CRX Extractor works: CRX Extractor was created with the help of official Google documentation. To get an Edge extension's source code, the utility parses the provided .crx file and extracts the "magic" header and the stored code signature.
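The header parsing described above can be sketched in Python using only the standard library. This is not CRX Extractor's actual code; it is a minimal illustration that assumes the publicly documented CRX layouts: a `Cr24` magic value, a little-endian 32-bit version, then either public key and signature lengths (version 2) or a single header length (version 3), followed by an ordinary ZIP archive. The function names are hypothetical, and the signature is skipped rather than verified.

```python
import io
import struct
import zipfile

CRX_MAGIC = b"Cr24"


def zip_offset(crx_bytes):
    """Return the byte offset at which the embedded ZIP archive starts."""
    if crx_bytes[:4] != CRX_MAGIC:
        raise ValueError("not a CRX file: missing 'Cr24' magic")
    (version,) = struct.unpack_from("<I", crx_bytes, 4)
    if version == 2:
        # CRX2: magic, version, public key length, signature length, key, signature
        key_len, sig_len = struct.unpack_from("<II", crx_bytes, 8)
        return 16 + key_len + sig_len
    if version == 3:
        # CRX3: magic, version, header length, header (contains the signatures)
        (header_len,) = struct.unpack_from("<I", crx_bytes, 8)
        return 12 + header_len
    raise ValueError(f"unsupported CRX version: {version}")


def open_crx_archive(crx_bytes):
    """Skip the CRX header and open the remaining bytes as a ZIP archive."""
    return zipfile.ZipFile(io.BytesIO(crx_bytes[zip_offset(crx_bytes):]))


if __name__ == "__main__":
    import sys

    with open(sys.argv[1], "rb") as fh:
        archive = open_crx_archive(fh.read())
    print("\n".join(archive.namelist()))
```

Run with the path to a `.crx` file as the only argument, the script lists the files inside the package; calling `archive.extractall(...)` instead would unpack them to a folder of your choice.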

We are trying to parse href attributes from the DOM of a job website.
